Until now, we have developed our infrastructure code as a single developer. As a result, our state file is created and maintained on the local development computer. This is fine for a team of one, but as others join in, each person ends up with their own copy of the state file, and those copies can drift out of sync. This post and the accompanying video examine using Azure Storage as a remote backend to host a shared state file.

This post and video are a continuation of my series on Terraform. Check the link below for a list of other articles in the series.

https://www.ciraltos.com/category/terraform/

Terraform state maps real-world resources to the configuration, tracks metadata such as dependencies between resources, and improves performance for large infrastructures by caching resource information.

How do we address these issues when a team deploys infrastructure as code? We use a centralized state file that everyone can access. Terraform supports storing state remotely in Terraform Cloud, Amazon S3, Azure Blob Storage, Google Cloud Storage, Alibaba Cloud, and more. A remote state can be accessed and shared by multiple people.

As this series is on Terraform with Azure, we will use Azure Blob Storage. There are two steps: first, create a storage account; second, configure main.tf to use the remote state location.

Create the Storage Account

One thing to note before we get started: we are not using Terraform to create the storage account. Terraform could be used, and it would work the same way. However, the storage account holds the remote state, which must persist through the lifecycle of the code. We can't simply delete and recreate the storage account without losing the state file. Because of that, this example uses the Azure CLI.
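If you would rather manage the storage account with Terraform, a minimal sketch follows. It assumes the hashicorp/azurerm provider (3.x) and the hashicorp/random provider; the resource names, location, and SKU are hypothetical placeholders, not values from the author's script. Because destroying this configuration would delete the state it hosts, it would typically live in its own configuration, and the prevent_destroy lifecycle setting makes Terraform reject any plan that would delete the account.

```hcl
# Hypothetical bootstrap configuration for the shared state storage account.
# Assumes azurerm 3.x and the random provider; names and location are placeholders.
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
    random = {
      source = "hashicorp/random"
    }
  }
}

provider "azurerm" {
  features {}
}

# Random suffix so the globally unique storage account name is unlikely to collide.
resource "random_integer" "suffix" {
  min = 10000
  max = 99999
}

resource "azurerm_resource_group" "tfstate" {
  name     = "rg-tfstate"
  location = "eastus"
}

resource "azurerm_storage_account" "tfstate" {
  # Lowercase letters and digits only, 3 to 24 characters.
  name                     = "tfstate${random_integer.suffix.result}"
  resource_group_name      = azurerm_resource_group.tfstate.name
  location                 = azurerm_resource_group.tfstate.location
  account_tier             = "Standard"
  account_replication_type = "LRS"

  # Refuse to plan a destroy of the account that holds shared state.
  lifecycle {
    prevent_destroy = true
  }
}

# Private container that will hold the state blob.
resource "azurerm_storage_container" "tfstate" {
  name                  = "tfstate"
  storage_account_name  = azurerm_storage_account.tfstate.name
  container_access_type = "private"
}
```

The random_integer suffix plays the same role as get-random in the CLI script.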

The images below don't show the complete commands. All code used in this example can be found here:

https://github.com/tsrob50/TerraformExamples

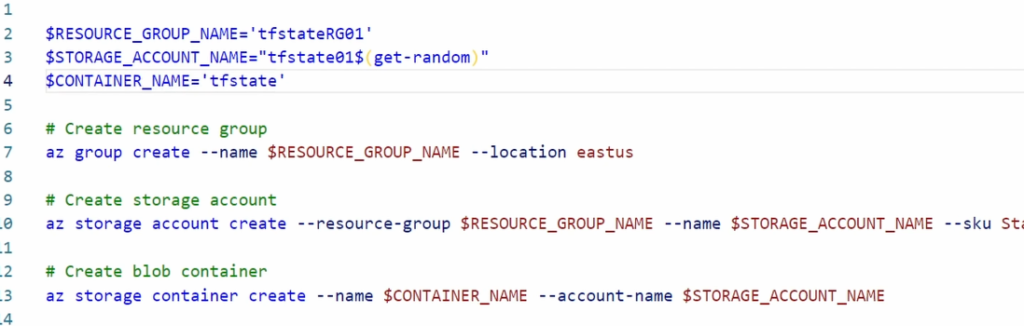

Start by connecting to Azure, then set the resource group name, storage account name, and container name variables in the storage setup script. Once updated, highlight and run those lines to load the variables into the session.

The get-random cmdlet appends a random number to the storage account name to help make it unique, since storage account names must be globally unique across Azure.

Run the az group create, az storage account create, and az storage container create commands to create the resource group, storage account, and the blob container used for the state file.

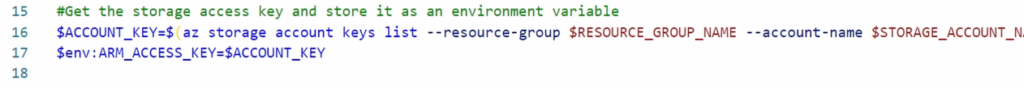

Terraform needs rights to access the storage account when running the terraform init, plan, and apply commands. We will use the storage account key for this. We could add the key to the main.tf file, but that would go against the best practice of keeping secrets out of code. Instead, we'll use an environment variable to pass in the key when running commands: the azurerm backend reads the key from ARM_ACCESS_KEY, which PowerShell sets as $env:ARM_ACCESS_KEY. Run the commands below to add the key to the environment variable.

This example uses the environment variable, but there are other options for storing the storage account key, such as Azure Key Vault. Keep in mind that anyone deploying the code will need to set the environment variable to access the storage account.
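Another option avoids distributing the key at all. The azurerm backend accepts a use_azuread_auth argument that authenticates to Blob Storage with Microsoft Entra ID (Azure AD) credentials, such as your az login session, instead of ARM_ACCESS_KEY. The sketch below assumes each person deploying has a data-plane role such as Storage Blob Data Contributor on the storage account; the angle-bracket values are placeholders, and the backend block itself is explained in the next section.

```hcl
terraform {
  backend "azurerm" {
    resource_group_name  = "<storage_account_resource_group>"
    storage_account_name = "<storage_account_name>"
    container_name       = "<storage_container_name>"
    key                  = "terraform.tfstate"

    # Use Entra ID (Azure AD) credentials for Blob Storage instead of the
    # storage account key, so ARM_ACCESS_KEY does not need to be set.
    use_azuread_auth = true
  }
}
```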

Create the Backend State

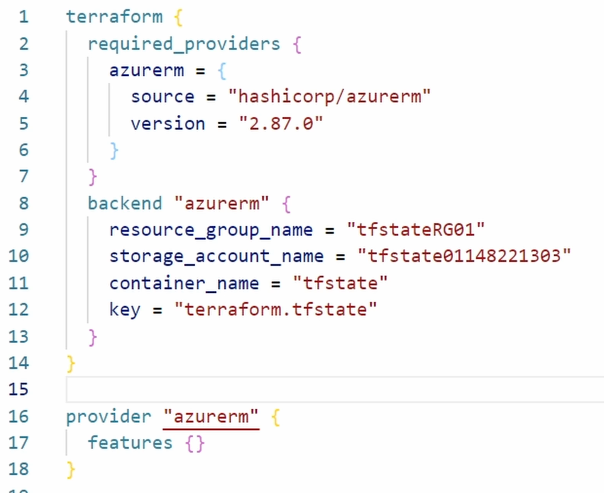

Now that we have created the backend state location, we can configure Terraform to use it. The configuration is set in the terraform block of the main.tf file. Add the following code to the terraform block.

```hcl
backend "azurerm" {
  resource_group_name  = "<storage_account_resource_group>"
  storage_account_name = "<storage_account_name>"
  container_name       = "<storage_container_name>"
  key                  = "terraform.tfstate"
}
```

The backend block goes inside the terraform block. Its label, azurerm, is the backend type, which tells Terraform to store state in Azure Blob Storage.

Provide the storage account resource group name, storage account name, and container name.

Provide a key. The key is the name of the state file (the blob) inside the container; this example uses terraform.tfstate. Don't confuse key in the backend block with the storage account key.

Once finished, the terraform block will look similar to the image below:

The configuration above will direct the state file to the shared Azure Storage Account, where it will be available for others working on the infrastructure.

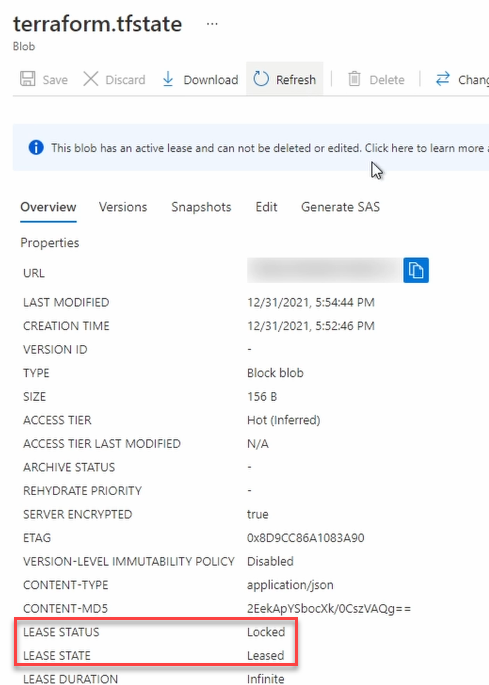
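For reference, a text sketch of a terraform block of this shape might look like the following. The required_providers entry and its ~> 3.0 version constraint are assumptions for illustration rather than values taken from the image, and the angle-bracket values are placeholders for your own resource names.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
  }

  # Store state in Azure Blob Storage. The storage account key is read from
  # the ARM_ACCESS_KEY environment variable, so it never appears in this file.
  backend "azurerm" {
    resource_group_name  = "<storage_account_resource_group>"
    storage_account_name = "<storage_account_name>"
    container_name       = "<storage_container_name>"
    key                  = "terraform.tfstate"
  }
}

provider "azurerm" {
  features {}
}
```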

Storage Account Lease Status

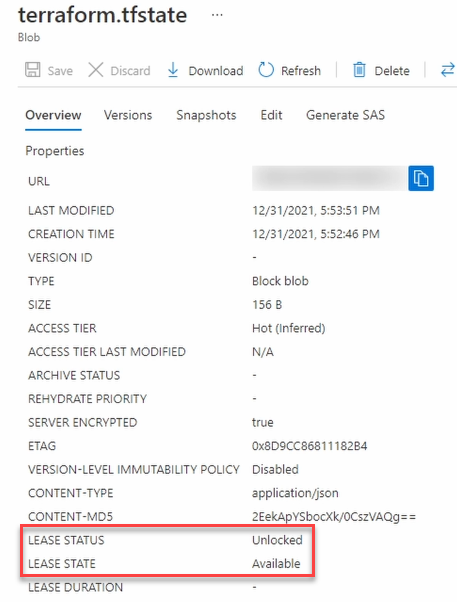

A shared backend provides a central location for the state file, allowing multiple reads from a single location. But what if two people apply an update at the same time?

Azure Blob Storage supports blob leases, which the azurerm backend uses as a locking mechanism to prevent multiple applies from running simultaneously. To see this, go to the storage account in the portal, select Containers under Data storage, and open the state container.

Click the state file, terraform.tfstate in this example. Notice that the lease status shows Unlocked and the lease state shows Available.

When terraform init, plan, or apply runs, the lease status changes to Locked and the lease state changes to Leased, as shown below.

[Image: Lease Locked]

Locking the file prevents multiple operations from modifying the state file at the same time.

That is how to use an Azure Storage account as a Terraform remote backend.

5 thoughts on “Remote Backend State with Terraform and Azure Storage”


Hi Travis,

I don’t know why this tfstate file matters.

Since TF is a declarative language, every time we run terraform apply, it will automatically generate the tfstate file.

So what is the point of storing this file in a centralized location?

Thanks

If you implement a CI/CD pipeline, you need to have the state file in a centralized location. Every runner is a new machine that does not know anything about past deployments.

You will understand why the tfstate is important the first time you deploy a complex template, lose your tfstate file, and have to destroy it all by hand. Without the tfstate, Terraform doesn't know how to destroy what it has applied.

If you have multiple Terraform stacks storing remote state in Azure Blobs, how do we import the state of one stack into another, the way I do with CloudFormation exports from one stack to another? Is that not a thing in Terraform?